GestureLab X__ concept

DIGITAL GESTURES

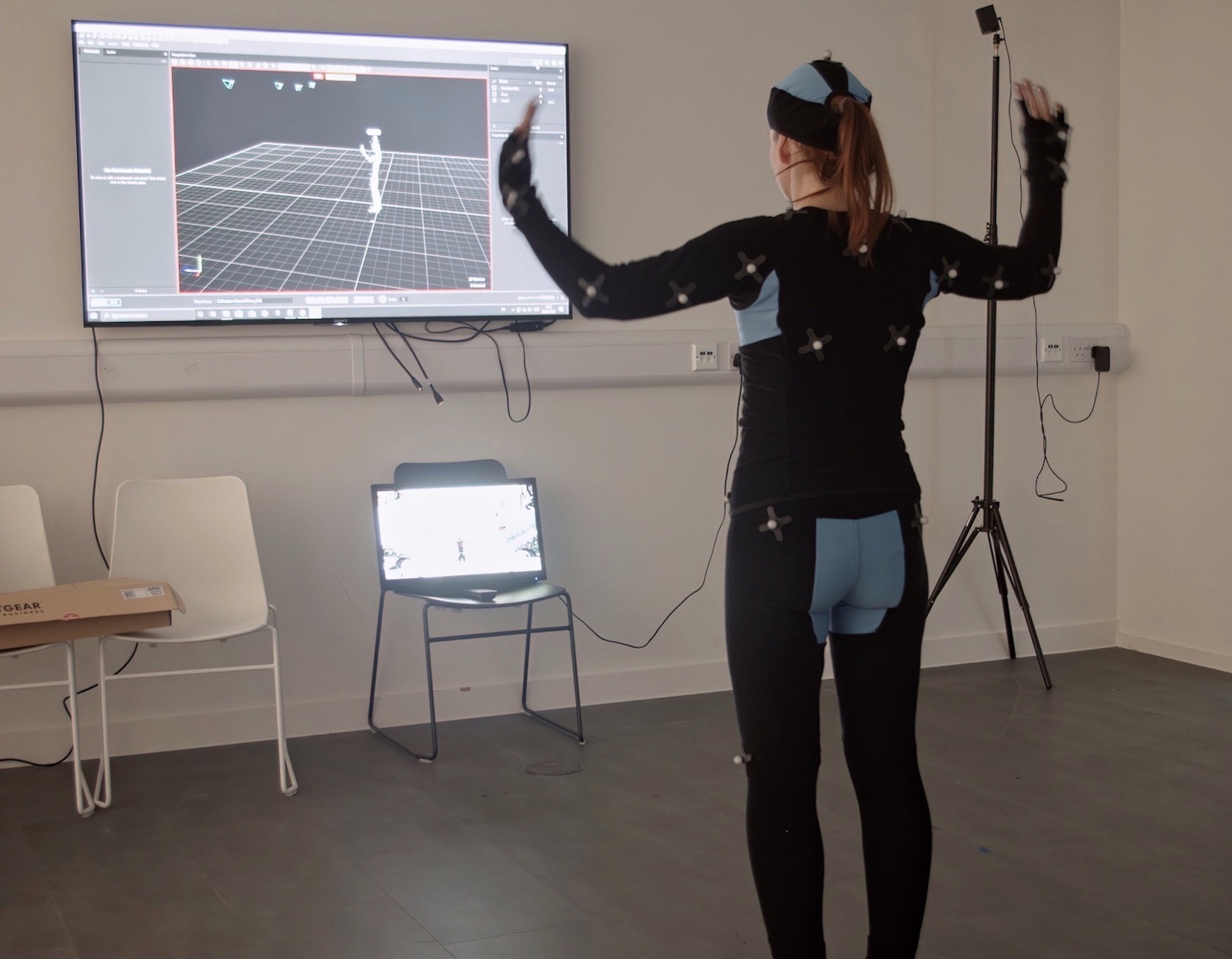

GestureLab Y_ proposition for DIGITAL GESTURES, Royal College of Art, 2020.

_____Working gesture: from biofeedback to soundscapes

I wish to develop an approach that uses EMG/EKG sensors, contact mics and accelerometers as acoustic pickups of body gestures, and to propose a model that translates the resulting data into gestic soundscapes.

Instead of using expensive ready-made products like BITalino or Myo, I wish to work with DIY sensors and Arduino boards. This would significantly lower the cost, allow for different ways of organising and sharing between participants, and reduce the chance of the equipment becoming obsolete.

Current open-source solutions that translate Myo data into OSC or MIDI controller messages focus mostly on delivering audio-visual performance. Additionally, the Myo gesture armband has now been discontinued. I am interested in drawing on these sources, yet the intention is not to create a musical score per se but to generate echoes of existing labours while they are enacted. I wish to combine these translated echoes with the actual sounds of internal bodily effort, as picked up through contact mics or MEMS accelerometers. I envisage a range of soundscapes, from the gesture of a hairdresser to that of a pool cleaner, from the gestic professional to members of the public mimicking the actions.

There are therefore two distinct stages to the project: 1) learning how to build DIY body sensors and retrieve data; 2) developing methods to translate biofeedback into acoustic outputs. Both stages involve selecting hardware and software, learning basic assembly, coding and MIDI principles, and testing. I will work with physical computing and sound technicians (John Wild, Ioannis Galatos) and with participants/volunteers from previous GestureLabs.
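To make the second stage more concrete, the following is a minimal sketch of one possible translation step. It is not a settled method. It assumes that a DIY EMG sensor delivers raw integer readings in the range 0 to 1023 (as a 10-bit analogue input would) with a resting baseline near 512, and that the target is a MIDI Control Change message. The function names, the baseline, the smoothing factor `alpha` and the controller number are all hypothetical choices made for illustration.

```python
from typing import Iterable, List


def emg_envelope(samples: Iterable[float], baseline: float = 512.0,
                 alpha: float = 0.1) -> List[float]:
    """Rectify raw readings around an assumed resting baseline and smooth
    them with an exponential moving average to get an effort envelope."""
    envelope = []
    level = 0.0
    for sample in samples:
        rectified = abs(sample - baseline)             # distance from rest
        level = alpha * rectified + (1.0 - alpha) * level
        envelope.append(level)
    return envelope


def to_midi_value(value: float, max_value: float = 512.0) -> int:
    """Scale an envelope value linearly to the 7-bit MIDI range 0..127."""
    if max_value <= 0:
        raise ValueError("max_value must be positive")
    scaled = round(127 * value / max_value)
    return max(0, min(127, scaled))                    # clamp out-of-range input


def control_change(channel: int, controller: int, value: int) -> bytes:
    """Build a 3-byte MIDI Control Change message (status byte 0xB0 | channel)."""
    if not (0 <= channel <= 15):
        raise ValueError("channel must be 0..15")
    if not (0 <= controller <= 127 and 0 <= value <= 127):
        raise ValueError("controller and value must be 0..127")
    return bytes([0xB0 | channel, controller, value])


if __name__ == "__main__":
    # Hypothetical readings: rest, a burst of muscular effort, rest again.
    readings = [512, 515, 700, 820, 760, 540, 512]
    for level in emg_envelope(readings):
        message = control_change(channel=0, controller=1,
                                 value=to_midi_value(level))
        print(message.hex())
```

The smoothing factor controls how quickly the sound responds to effort: a larger `alpha` follows the gesture more closely, while a smaller one produces a slower, more ambient response. The resulting bytes could be passed to any synthesiser or sound software that accepts MIDI.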

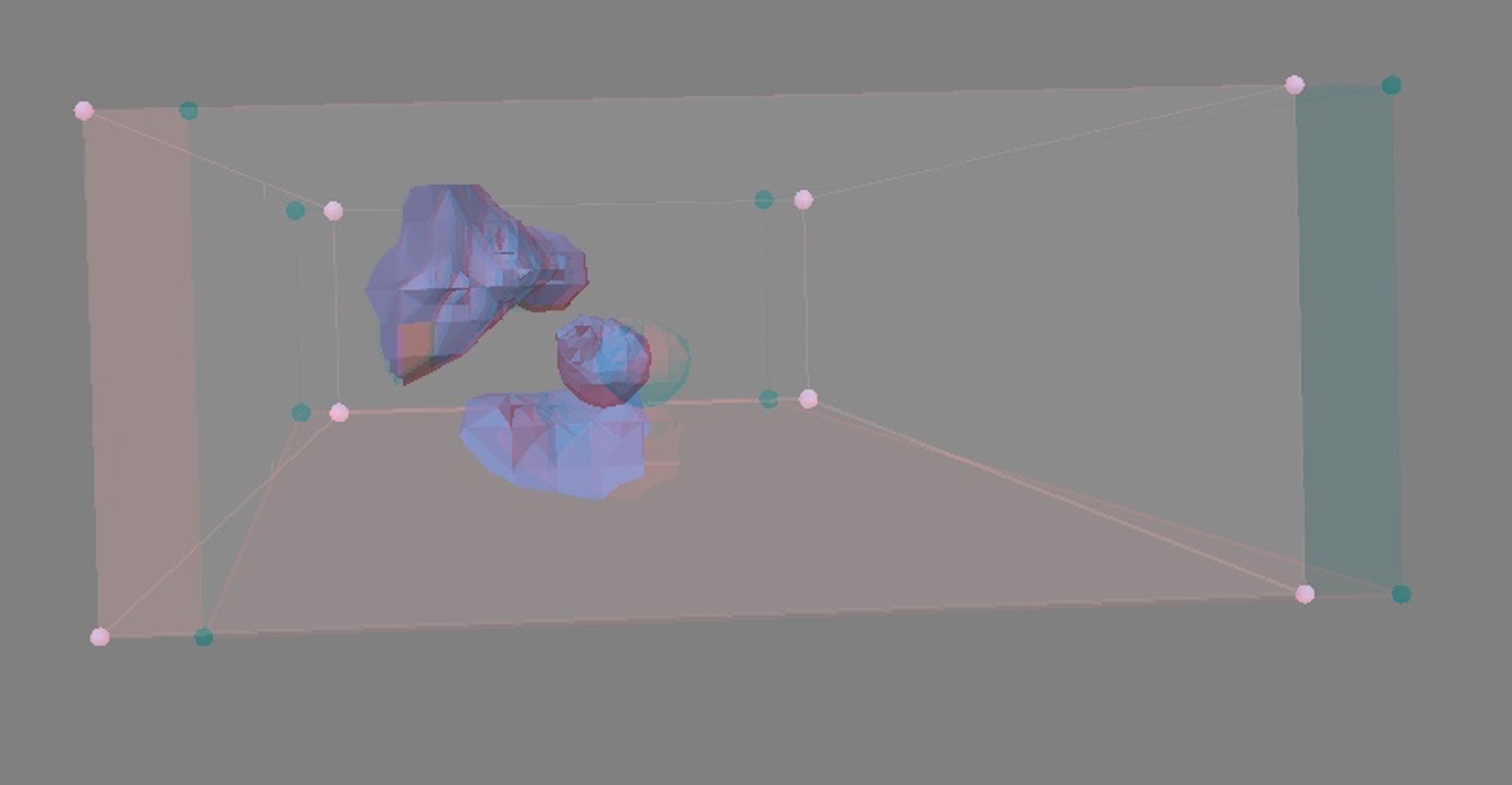

Space of a Haircut: Between the Acromion and the Neurocranium

Image modelled from photographic documentation of haircutting using photogrammetry software.

2018, Image © artist's own